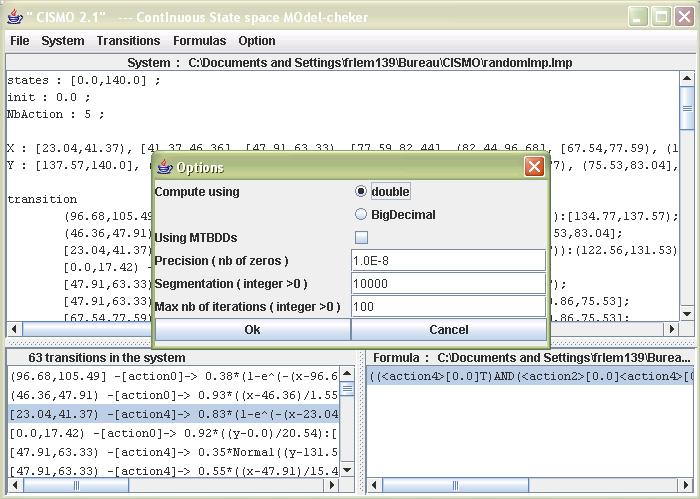

Options

Compute using...

You can compute the probabilities using either regular double numbers or more precise BigDecimal numbers. Computing with regular double numbers is very fast and highly optimized. It does, however, introduce a non-negligible error into the calculation, especially during additions and subtractions. To keep errors to a minimum, use BigDecimal numbers: they are not limited to the 64 bits of a double and so provide greater accuracy, at the expense of much slower computation.
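The kind of error involved can be seen in a few lines. The sketch below is only an illustration, not the tool's own code: it uses Python, where `float` is a 64-bit double and `decimal.Decimal` plays the role that BigDecimal plays here.

```python
from decimal import Decimal

# Add 0.1 ten times using binary doubles (Python's float).
double_total = 0.0
for _ in range(10):
    double_total += 0.1

# Do the same sum with exact decimal arithmetic
# (Decimal is a stand-in for BigDecimal in this sketch).
decimal_total = Decimal("0")
for _ in range(10):
    decimal_total += Decimal("0.1")

print(double_total == 1.0)            # False: rounding errors have accumulated
print(decimal_total == Decimal("1"))  # True: the decimal sum is exact
```

The double result is off by a tiny amount only, but such errors can add up over the many additions and subtractions of a long computation.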

Using MTBDDs

Check this box to use multi-terminal binary decision diagrams (MTBDDs). This can improve verification performance by up to 20%. Unfortunately, it currently consumes a very large amount of memory due to a bug in the garbage collection. It is also only available on Linux for now.

Precision (nb of zeros)

The smallest unit representable by the system. Most of the time, this is equal to 10 raised to the power of minus the number of decimals to keep (for example, 0.001 when keeping 3 decimals).
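As a small illustration of this relation, the following Python sketch derives the smallest unit from a number of decimals. The function name `smallest_unit` is hypothetical and is not part of the tool.

```python
from decimal import Decimal

def smallest_unit(decimals: int) -> Decimal:
    """Return 1 * 10^(-decimals) as an exact decimal value.

    Hypothetical helper for illustration only.
    """
    # scaleb shifts the decimal exponent, so 1 becomes 10^(-decimals).
    return Decimal(1).scaleb(-decimals)

print(smallest_unit(3))  # 0.001
```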

Segmentation (integer > 0)

When analysing the functions attached to the transitions, defines how many segments the interval of states is split into when searching for zeros. The higher the number, the less likely it is that a zero goes undetected, but the slower the verification will be.
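The trade-off can be sketched as follows. This Python example assumes that zeros are located by looking for a sign change across each segment, which is a common approach but not necessarily the one the tool uses. The names `find_sign_changes` and `example_function` are hypothetical.

```python
def find_sign_changes(f, lo, hi, segments):
    """Return the sub-intervals of [lo, hi] on which f changes sign.

    Assumed detection rule: a segment may contain a zero if f has
    opposite signs (or is zero) at its two ends.
    """
    width = (hi - lo) / segments
    brackets = []
    for i in range(segments):
        a = lo + i * width
        b = lo + (i + 1) * width
        if f(a) * f(b) <= 0:
            brackets.append((a, b))
    return brackets

def example_function(x):
    # Two zeros, at x = 0.2 and x = 0.7.
    return (x - 0.2) * (x - 0.7)

# With a single segment, both ends are positive and both zeros are missed.
print(find_sign_changes(example_function, 0.0, 1.0, 1))
# With two segments, each zero falls in its own segment and is detected.
print(find_sign_changes(example_function, 0.0, 1.0, 2))
```

Each extra segment costs additional function evaluations, which is why a larger value slows verification down.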

Max nb of iterations (integer > 0)

The maximum number of iterations performed when testing a possible zero in a function.
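To show how such a limit typically behaves, here is a minimal Python sketch that refines a candidate zero by bisection and gives up once the iteration limit is reached. Bisection is an assumption made for illustration, since the method the tool actually uses is not described here. The name `bisect_zero` and its parameters are hypothetical.

```python
def bisect_zero(f, a, b, precision, max_iterations):
    """Refine a zero of f inside [a, b], where f(a) and f(b) differ in sign.

    Returns an approximation of the zero, or None if max_iterations
    is reached before the interval is narrower than the precision.
    """
    fa = f(a)
    for _ in range(max_iterations):
        mid = (a + b) / 2
        fm = f(mid)
        # Stop when the zero is hit exactly or the interval is small enough.
        if fm == 0 or (b - a) / 2 < precision:
            return mid
        if fa * fm < 0:
            b = mid          # the zero lies in the left half
        else:
            a, fa = mid, fm  # the zero lies in the right half
    return None

def square_minus_two(x):
    return x * x - 2

print(bisect_zero(square_minus_two, 1.0, 2.0, 1e-6, 50))  # close to 1.41421
print(bisect_zero(square_minus_two, 1.0, 2.0, 1e-6, 3))   # None: limit too low
```

In this sketch, a limit that is too low makes the search give up, while a very high limit only costs time when the search fails to converge.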